Controlling the state of appsink pipeline depending on its RTSP appsrc clients


I have a hierarchy like below:

caps = "video/x-raw,width=640,height=512,format=GRAY8"

-SourcePipeline
---GstElement pipeline (has a videotestsrc and an appsink)
---a GstAppSrc pointer array to push samples to
-GstRTSPServer
---GstRTSPMediaFactory (has a GstAppSrc named "appsrc0", mounted on "/test")
---GstRTSPMediaFactory (has a GstAppSrc named "appsrc1", mounted on "/test2")

On the media factories I listen for the media-constructed signal and register the appsrc pointers with the source pipeline. When a media changes state to GST_STATE_NULL, I remove its pointer from the appsrc array in SourcePipeline.

On the source pipeline, the appsink pushes each sample to the appsrcs one by one. When there are no appsrcs left in the array, the pipeline's state is changed to GST_STATE_NULL until the first appsrc joins again.

I have some questions and problems:

1. When the 1st client connects to RTSP, it gets the stream instantly. When the 2nd one joins on the 2nd mountpoint, the stream pauses when GstRTSPMedia changes its state to GST_STATE_PLAYING, and resumes after 5-6 seconds. Sometimes it does not resume, though: the stream fails and I can't get it up again without restarting the program.

2. Is my approach of controlling SourcePipeline correct? How should I do it on an RTSP server?

3. I set the appsrc's block property to TRUE. If I don't, it uses all the memory until the system becomes unresponsive. Again, what is the right approach here?

4. I am currently using push_sample to push buffers to the appsrcs. What is the difference between push_sample and push_buffer? Which is more efficient?

5. When 2 clients on different mountpoints are watching the stream, the stream breaks down when 1 of them disconnects or stops the stream. I check the new-state signal on GstRTSPMedia to track the RTSP pipeline's state. Clearly this approach doesn't work; what is the correct one here?
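For reference, the bookkeeping described in this post (register an appsrc when its media is constructed, remove it when its media goes to GST_STATE_NULL, run the source pipeline only while at least one appsrc is registered) could be sketched roughly as below. This is only a model of the logic, not the actual code: `ClientRegistry`, `registry_add`, `registry_remove` and `MAX_CLIENTS` are hypothetical names, and the `void *` slots stand in for the GstAppSrc pointers. It contains no GStreamer calls; the comments mark where `gst_element_set_state()` would presumably go.

```c
#include <stdbool.h>
#include <stddef.h>

#define MAX_CLIENTS 8  /* hypothetical upper bound on simultaneous appsrcs */

/* Hypothetical registry; each slot would hold a GstAppSrc* in the real program. */
typedef struct {
    void  *clients[MAX_CLIENTS];
    size_t count;
} ClientRegistry;

/* Register an appsrc. Returns true only when it is the first client, i.e. the
 * point where the source pipeline would be set to GST_STATE_PLAYING. */
static bool registry_add(ClientRegistry *r, void *appsrc)
{
    if (appsrc == NULL || r->count == MAX_CLIENTS)
        return false;
    for (size_t i = 0; i < r->count; i++)
        if (r->clients[i] == appsrc)
            return false;               /* already registered, nothing changes */
    r->clients[r->count++] = appsrc;
    return r->count == 1;
}

/* Unregister an appsrc. Returns true only when it was the last client, i.e. the
 * point where the source pipeline would be set to GST_STATE_NULL. */
static bool registry_remove(ClientRegistry *r, void *appsrc)
{
    for (size_t i = 0; i < r->count; i++) {
        if (r->clients[i] == appsrc) {
            /* Fill the hole with the last entry; order does not matter here. */
            r->clients[i] = r->clients[--r->count];
            r->clients[r->count] = NULL;
            return r->count == 0;
        }
    }
    return false;                       /* unknown pointer, nothing changes */
}
```

In the real program the array is touched from the signal callbacks and from the appsink side, which may run on different threads, so it would presumably need a lock around these two functions and around the push loop.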

Re: Controlling the state of appsink pipeline depending on its RTSP appsrc clients


After playing with the system a little more, I have some observations:

- For the first PLAY requests everything works as intended; a client of the second appsrc (different mountpoint) can connect without any problems.
- After one of the clients STOPs or disconnects, reconnecting is always problematic. Say the 2nd client (also on the 2nd mountpoint) disconnected and tries to connect again: it can't connect, but the 1st stream goes on smoothly without any problems.
- I changed the way SourcePipeline handles new appsrc clients. It now changes state to NULL and then back to PLAYING when a new client is added. This seems to fix a client not being able to rejoin, but it causes the earlier client to hang for a random amount of time (from 10 seconds up to 1 minute), which makes me think there's a problem with timing and latency.

The problem is that I don't know what to do after this point.

Re: Controlling the state of appsink pipeline depending on its RTSP appsrc clients


Another update!

What I said last time about the 2nd case (where I set the source pipeline's state to NULL and then back to PLAYING) was wrong. The first connected pipeline doesn't wait for a random amount of time; it waits until the source pipeline's running time catches up with its own old running time. So if the 2nd client connects while the 1st client has been playing for 25 seconds, the first client drops buffers (not 100% sure about this) until the timer is back to 0:25, then continues playing.

I think the same could be happening in the case where I don't set the source pipeline to NULL: the second client waits for buffers with a running time of 0 (or close to 0), and since these are never going to arrive, it finally gives up and disconnects.

I am still in need of workarounds or ideas. I am going to try passing buffers instead of samples now, and timestamp them according to their destination appsrc.
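To make the "timestamp them according to their destination appsrc" idea concrete, here is a minimal sketch of the arithmetic only, under the assumption that each client should see timestamps starting at 0 from the first buffer it receives. `ClientClock` and `rebase_pts` are hypothetical names, times are plain nanosecond counts (the same unit as GstClockTime), and the source timestamps are assumed not to go backwards while a client is connected.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical per-appsrc state; times are in nanoseconds, like GstClockTime. */
typedef struct {
    bool     have_base;
    uint64_t base_ns;   /* source timestamp of the first buffer sent to this client */
} ClientClock;

/* Map a source-pipeline timestamp to a timestamp for one client, so that the
 * first buffer the client receives is stamped 0. */
static uint64_t rebase_pts(ClientClock *c, uint64_t src_pts_ns)
{
    if (!c->have_base) {
        /* First buffer for this client: remember where it joined. */
        c->base_ns = src_pts_ns;
        c->have_base = true;
    }
    /* Assumption violated (source went backwards, e.g. after a restart):
     * clamp to 0 rather than letting the unsigned subtraction wrap around. */
    if (src_pts_ns < c->base_ns)
        return 0;
    return src_pts_ns - c->base_ns;
}
```

With this model, a client that joins when the source is at 0:25 gets its first buffer stamped 0, while the first client keeps its own offset. In the real program the result would presumably be written with `GST_BUFFER_PTS()` on a per-client copy of the buffer (for example from `gst_buffer_copy()`) before `gst_app_src_push_buffer()`, since the same source buffer is shared between all appsrcs.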

Re: Controlling the state of appsink pipeline depending on its RTSP appsrc clients


I don't know if I should open a new thread or keep posting updates on this one. My title is not appropriate for the question and this whole thread is starting to feel like spam, but anyway.

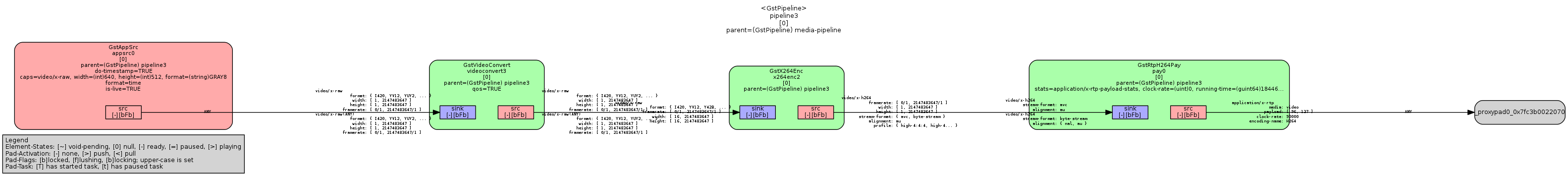

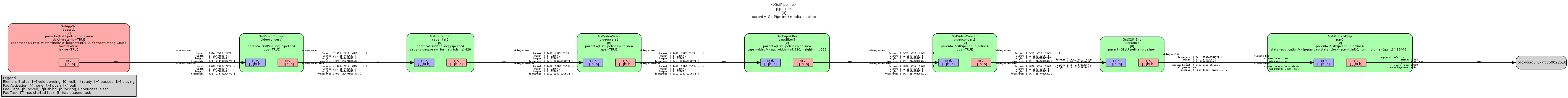

Here are my pipelines, in case they help anyone point me to directions or ideas.
