Hi, I have succeeded in pulling data from two live streaming pipelines using appsinks. Now I want to push that data into a newly created pipeline that mixes the two streams of buffers and stores the result in a WAV file. The new pipeline, called recordpipeline, contains the elements below.

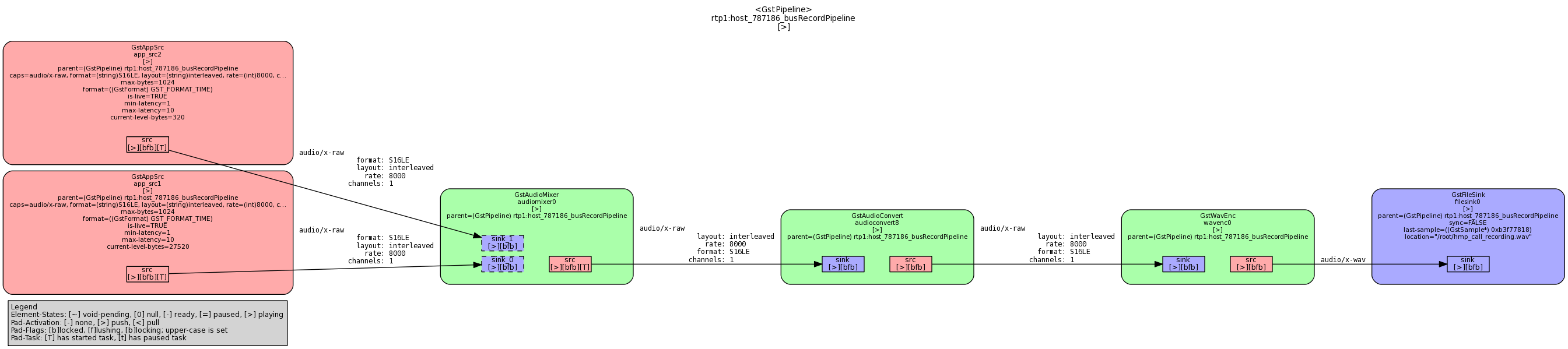

appsrc1->audiomixer->audioconverter->wavenc->filesink.

Pushing buffers to appsrc1 works. Pushing to appsrc2 always fails, so only the audio coming from appsrc1 gets stored, and the resulting WAV file always contains only the buffers given to appsrc1. I also want to mix in the buffers that I am pushing to the appsrc2 element. Please refer to the attached screenshot of the record pipeline.
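To make the intended topology concrete, here is a minimal sketch of the record pipeline written as a gst-launch style description, with both appsrc elements feeding the same audiomixer. The helper name `record_pipeline_description`, the mixer name `mix`, the output file name `mixed.wav`, and the `format=time` setting are my assumptions for illustration, and I am assuming the standard `audioconvert` element is what is meant by the converter. It is not the actual code from my application.

```python
def record_pipeline_description(num_sources=2, location="mixed.wav"):
    """Build a gst-launch style description of the record pipeline.

    Hypothetical helper: it only assembles a string and does not
    touch GStreamer itself.
    """
    # Shared downstream branch: the mixer output goes to the WAV file.
    mix_branch = (
        "audiomixer name=mix ! audioconvert ! wavenc ! "
        f"filesink location={location}"
    )
    # One appsrc per input stream, each linked to its own request pad
    # on the mixer through the "mix." reference.
    # format=time is an assumption (buffers carry timestamps in time units).
    sources = [
        f"appsrc name=appsrc{i} format=time ! mix."
        for i in range(1, num_sources + 1)
    ]
    return " ".join([mix_branch] + sources)


if __name__ == "__main__":
    print(record_pipeline_description())
```

A string like this could be handed to `gst_parse_launch` (or `Gst.parse_launch` from Python), and each appsrc fetched by name afterwards. The point of the sketch is only that appsrc2 needs its own link to the mixer, just like appsrc1. Caps for the raw audio would still have to be set on each appsrc.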